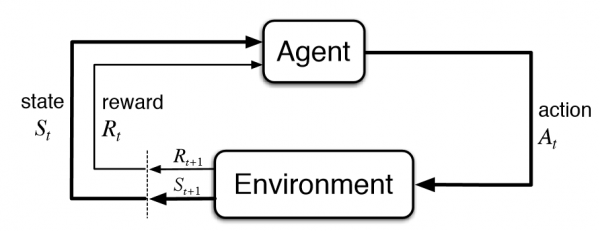

Reinforcement learning is a type of machine learning in which an agent learns to make decisions by trial and error. The agent interacts with its environment and learns from the rewards or punishments it receives for its actions. The goal of reinforcement learning is to maximize the cumulative reward the agent collects over time.
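"Cumulative reward" is usually computed as a discounted return, G = r_0 + γ·r_1 + γ²·r_2 + …, where the discount factor γ (between 0 and 1) makes rewards that arrive sooner count more. The minimal sketch below computes it; the function name `discounted_return` and the default γ = 0.95 are illustrative assumptions.

```python
def discounted_return(rewards, gamma=0.95):
    """Sum of rewards where a reward received t steps in the future is scaled by gamma**t."""
    g = 0.0
    # Work backwards using the recursion G_t = r_t + gamma * G_{t+1}
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# A reward of 1 at each of three steps, discounted with gamma = 0.5:
print(discounted_return([1, 1, 1], gamma=0.5))  # 1.75 (= 1 + 0.5 + 0.25)
```

With γ close to 1 the agent plans far ahead; with γ close to 0 it cares almost only about the next reward.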

The process of reinforcement learning starts with an agent in an environment, which can be real or simulated. At each step, the agent observes the current state of the environment, takes an action, and receives a reward or punishment together with the new state that results. The agent then updates its policy, which is the rule that maps states to actions. This process is repeated many times, and the agent improves its policy from the experience it accumulates.
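The observe, act, reward loop can be sketched in a few lines of Python. `Corridor` is a hypothetical toy environment invented for illustration (five positions, reward 1 for reaching the right end), and the policy is passed in as a plain function; the policy-update step is left out here, since the Q-learning example in this article shows one.

```python
import random


class Corridor:
    """Hypothetical toy environment: positions 0..length-1; reaching the last one ends the episode with reward 1."""

    def __init__(self, length=5):
        self.length = length
        self.pos = 0

    def reset(self):
        """Start a new episode at position 0 and return the initial state."""
        self.pos = 0
        return self.pos

    def step(self, action):
        """Action 0 moves left, action 1 moves right; the walls keep the position in range."""
        move = 1 if action == 1 else -1
        self.pos = max(0, min(self.length - 1, self.pos + move))
        done = self.pos == self.length - 1
        reward = 1.0 if done else 0.0
        return self.pos, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode of the observe -> act -> reward loop and return the total reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # the policy chooses an action for the current state
        state, reward, done = env.step(action)  # the environment returns the new state and a reward
        total_reward += reward
        if done:
            break
    return total_reward


random.seed(0)
print(run_episode(Corridor(), policy=lambda s: random.choice([0, 1])))  # a random policy
print(run_episode(Corridor(), policy=lambda s: 1))  # always move right: 1.0
```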

One of the key components of reinforcement learning is the reward function, which provides the feedback signal the agent uses to judge how good its actions were. The reward function can be defined in different ways, depending on the task the agent is trying to solve. For example, in a game of chess, the reward could simply be +1 for a win, 0 for a draw, and -1 for a loss, or it could include intermediate signals such as the value of the pieces captured or the amount of control over the board.
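Two ways of defining a chess reward, an outcome-based one and a material-based one, can be written as ordinary functions. This is a hypothetical sketch: the function names and input format (a result string, a captured-piece name) are assumptions, and the piece values are the conventional 1/3/3/5/9 scale rather than anything a particular chess engine prescribes.

```python
# Conventional approximate piece values, used here only to illustrate a shaped reward.
PIECE_VALUES = {"pawn": 1, "knight": 3, "bishop": 3, "rook": 5, "queen": 9}


def outcome_reward(result):
    """Sparse reward: nonzero only when the game ends ('win', 'draw' or 'loss')."""
    return {"win": 1.0, "draw": 0.0, "loss": -1.0}[result]


def material_reward(captured_piece=None):
    """Shaped reward: immediate feedback worth the value of the piece just captured (0 if none)."""
    return float(PIECE_VALUES.get(captured_piece, 0))


print(outcome_reward("win"))    # 1.0
print(material_reward("rook"))  # 5.0
print(material_reward())        # 0.0
```

The sparse version matches the real goal but gives feedback only at the end of a game; the shaped version gives feedback on every capture but rewards a proxy, so an agent trained on it alone can learn to win material while losing games.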

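Another important concept in reinforcement learning is the trade-off between exploration and exploitation. Exploration refers to the agent trying out new actions to learn more about the environment, while exploitation refers to the agent taking actions that are known to be successful based on its past experience. An agent that only exploits may never discover a better strategy, while one that only explores never makes use of what it has learned.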
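A common way to balance the two is the epsilon-greedy rule, the same strategy used in both code examples later in this article: with a small probability ε the agent explores by picking a random action, and otherwise it exploits by picking the action with the highest estimated value. A minimal sketch, where the function name and the example value estimates are hypothetical:

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index from a list of estimated action values.

    With probability epsilon, explore by choosing uniformly at random;
    otherwise exploit by choosing the action with the highest value.
    """
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


q = [0.2, 0.8, 0.5]  # hypothetical value estimates for three actions
print(epsilon_greedy(q, epsilon=0.0))  # 1: with epsilon = 0 the best-known action is always chosen
print(epsilon_greedy(q, epsilon=0.1))  # usually 1, occasionally a random action
```

In practice ε is often started high and decayed over training, so the agent explores early and exploits more as its estimates improve.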

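One popular algorithm for reinforcement learning is Q-learning, which learns an action-value function Q(s, a): an estimate of the cumulative reward the agent can expect after taking action a in state s and acting well afterwards. These estimates are updated from each observed reward and the estimated value of the next state. Another popular family of algorithms is policy gradient methods, which directly optimize the agent's policy by adjusting its parameters in the direction that increases expected reward.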
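Q-learning is implemented in full later in this article, so here is the other family: a minimal REINFORCE-style policy gradient sketch on a hypothetical two-armed bandit (a one-step problem, so the return of an episode is just its reward). The policy is a softmax over per-arm preferences `theta`, and each update moves `theta` along r·∇log π(a), which raises the probability of actions that earned reward. The arm rewards, learning rate, and step count are illustrative assumptions; practical implementations usually also subtract a baseline from r to reduce variance.

```python
import math
import random


def softmax(prefs):
    """Turn preferences into probabilities (shifted by the max for numerical stability)."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(arm_rewards, steps=2000, lr=0.1, seed=0):
    """Train a softmax policy over bandit arms with the REINFORCE update; return final probabilities.

    After pulling arm a and receiving reward r, each theta[k] moves by
    lr * r * d/dtheta[k] log pi(a) = lr * r * (1[k == a] - pi(k)).
    """
    rng = random.Random(seed)
    theta = [0.0] * len(arm_rewards)
    for _ in range(steps):
        probs = softmax(theta)
        a = rng.choices(range(len(theta)), weights=probs)[0]  # sample an action from the policy
        r = arm_rewards[a]  # deterministic rewards keep the sketch simple
        for k in range(len(theta)):
            grad_log_pi = (1.0 if k == a else 0.0) - probs[k]
            theta[k] += lr * r * grad_log_pi
    return softmax(theta)


print(reinforce_bandit([0.0, 1.0]))  # probability mass shifts toward arm 1
```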

Reinforcement learning has been applied in many areas, including game playing, robotics, and autonomous vehicles. For example, reinforcement learning has been used to train agents to play games such as Go and poker at a superhuman level. In robotics, reinforcement learning has been used to train robots to perform tasks such as grasping objects and navigating environments. In autonomous driving, reinforcement learning is being researched for decision-making tasks such as lane changing and merging, much of it in simulation.

OpenAI

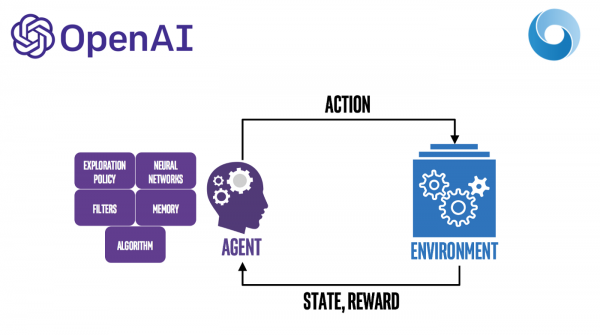

Reinforcement learning is a subfield of artificial intelligence that focuses on developing algorithms that enable an agent to learn by interacting with its environment. OpenAI is an AI research organization that has contributed both new algorithms and widely used tools to this field. In this section, we explore the role of OpenAI in the development of reinforcement learning.

OpenAI has been a major contributor to the field of reinforcement learning, with a particular focus on deep reinforcement learning. Deep reinforcement learning algorithms use neural networks to approximate value functions (such as the value of each action in a given state), policies, or both. This approach has been particularly successful in solving complex problems such as playing games, controlling robots, and optimizing resource allocation.
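The key difference from tabular methods is that Q-values come from a parameterized function, and learning means adjusting its weights by gradient steps instead of editing table entries. To stay dependency-free, the sketch below swaps in a linear function of hypothetical hand-chosen features φ(s) where deep RL would use a neural network; the function names and the example numbers are illustrative assumptions. The update is semi-gradient Q-learning.

```python
def q_value(weights, features):
    """Linear stand-in for a Q-network: Q(s, a) = w_a . phi(s)."""
    return sum(w * f for w, f in zip(weights, features))


def semi_gradient_q_update(weights, phi_s, action, reward, phi_next, done, alpha=0.1, gamma=0.95):
    """One Q-learning step on a function approximator; `weights` holds one weight vector per action.

    Returns the TD error. For a linear Q, the gradient with respect to w_a is the feature vector phi(s).
    """
    q_sa = q_value(weights[action], phi_s)
    next_best = 0.0 if done else max(q_value(w, phi_next) for w in weights)
    td_error = reward + gamma * next_best - q_sa
    weights[action] = [w + alpha * td_error * f for w, f in zip(weights[action], phi_s)]
    return td_error


weights = [[0.0, 0.0], [0.0, 0.0]]  # 2 actions x 2 features, all zero
err = semi_gradient_q_update(weights, phi_s=[1.0, 0.5], action=0, reward=1.0, phi_next=[0.0, 1.0], done=True)
print(err, weights[0])  # 1.0 [0.1, 0.05]
```

Because the weights are shared across states, one update also changes the predicted values of similar states; this generalization is what lets function approximation scale beyond problems small enough for a table.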

One of the best-known reinforcement learning projects from OpenAI is OpenAI Five, a team of AI agents that plays the game Dota 2. The agents were trained with deep reinforcement learning through large-scale self-play, and in 2019 they defeated OG, the reigning world champion team. This achievement is particularly impressive because Dota 2 is a complex team game with long matches, incomplete information about the opponents, a large action space, and many possible strategies.

OpenAI has also applied deep reinforcement learning to robotic manipulation. Its Dactyl project trained a robotic hand, largely in simulation, to reorient a block and later to manipulate a Rubik's Cube, with the hand learning these skills through trial and error.

In addition to developing new algorithms, OpenAI has provided resources for researchers and developers working on reinforcement learning. One of the most notable is OpenAI Gym, a toolkit for developing and comparing reinforcement learning algorithms through a common environment interface. Gym includes a wide variety of environments, such as Atari games and robotic simulations, that researchers can use to test their algorithms. Gym itself is no longer actively developed; its maintained successor, Gymnasium, is run by the Farama Foundation.

Another resource released by OpenAI is RoboSumo, a set of simulated environments in which robots compete in sumo wrestling matches. These competitive environments let researchers study agents that learn through competition and self-play.

In conclusion, OpenAI has played a significant role in the development of reinforcement learning algorithms, particularly in the area of deep reinforcement learning. The organization has also provided resources for researchers and developers working on reinforcement learning, which has helped to advance the field and promote collaboration. As reinforcement learning continues to grow in importance, we can expect OpenAI to continue to play a leading role in its development.

Uses

Here are five uses of reinforcement learning:

1- Robotics: Reinforcement learning can be used to train robots to perform specific tasks. For example, reinforcement learning algorithms have been used to teach robots how to navigate mazes, manipulate objects, and even play games.

2- Gaming: Reinforcement learning has been used to create intelligent agents for playing games like chess, Go, and poker. These agents are able to learn from their mistakes and improve their gameplay over time.

3- Autonomous Vehicles: Reinforcement learning is being researched as a way for autonomous vehicles to learn driving behavior. Such algorithms aim to let vehicles navigate traffic, avoid obstacles, and make safe driving decisions, and much of this work is carried out in simulation.

4- Marketing: Reinforcement learning is used in marketing to optimize campaigns and improve conversion rates. These algorithms can analyze user behavior and feedback to determine the best course of action for maximizing engagement and sales.

5- Healthcare: Reinforcement learning is being explored as a tool for personalizing medical treatments. By learning from patient data, reinforcement learning algorithms aim to identify the most effective treatments for specific patients, which could improve health outcomes.

Real-Life Applications

Here are five real-world applications that have used reinforcement learning:

1- AlphaGo: In 2016, DeepMind's AlphaGo program defeated Lee Sedol, one of the world's top players, 4-1 at the game of Go, a notoriously difficult strategy game. The program combined deep neural networks, trained first on human games and then with reinforcement learning through self-play, with Monte Carlo tree search to evaluate and improve its play.

2- Robotics: Reinforcement learning has been applied to robotics to enable robots to learn how to perform complex tasks. For example, researchers at the University of California, Berkeley used reinforcement learning to teach a robot to grasp objects in cluttered environments.

3- Self-driving cars: Reinforcement learning has been applied to self-driving cars to help them learn how to navigate complex environments. For example, researchers at NVIDIA used reinforcement learning to train a self-driving car to safely navigate through urban environments.

4- Healthcare: Reinforcement learning has been applied to healthcare to help doctors make more informed decisions about treatments. For example, researchers at Stanford University used reinforcement learning to develop a personalized treatment recommendation system for sepsis, a life-threatening condition.

5- Video games: Reinforcement learning has been applied to video games to help AI agents learn how to play them. For example, OpenAI used reinforcement learning to train an AI agent to play the game Dota 2 at a professional level.

Code Example (Q-learning)

Here is an example of implementing reinforcement learning with the Q-learning algorithm in Python, using NumPy:

```python
import numpy as np

# Define the environment: 6 states and 2 actions; the last state (5) is terminal
n_states = 6
n_actions = 2

# rewards[s, a] is the immediate reward for taking action a in state s
rewards = np.array([[0, 0], [1, 0], [0, 1], [1, 1], [0, 0], [0, 0]])

# transition_probabilities[s, a] is a probability distribution over the n_states next states,
# so the array has shape (n_states, n_actions, n_states). It is drawn at random (seeded)
# from a Dirichlet distribution, which guarantees that every row sums to 1.
rng = np.random.default_rng(0)
transition_probabilities = rng.dirichlet(np.ones(n_states), size=(n_states, n_actions))


# Define the Q-learning algorithm
def q_learning(rewards, transition_probabilities, n_states, n_actions,
               alpha=0.1, gamma=0.95, epsilon=0.1, n_episodes=100, rng=rng):
    terminal_state = n_states - 1
    Q = np.zeros((n_states, n_actions))
    for episode in range(n_episodes):
        # Start each episode in a random non-terminal state
        state = rng.integers(0, terminal_state)
        while True:
            # Choose an action using an epsilon-greedy strategy
            if rng.random() < epsilon:
                action = rng.integers(0, n_actions)
            else:
                action = np.argmax(Q[state])

            # Take the action and observe the next state and reward
            next_state = rng.choice(n_states, p=transition_probabilities[state, action])
            reward = rewards[state, action]

            # Update the Q-value; there is no future value to bootstrap from after the terminal state
            done = next_state == terminal_state
            target = reward if done else reward + gamma * np.max(Q[next_state])
            Q[state, action] += alpha * (target - Q[state, action])

            # Move to the next state and stop once the episode is done
            state = next_state
            if done:
                break
    return Q


# Train the agent using the Q-learning algorithm
Q = q_learning(rewards, transition_probabilities, n_states, n_actions)

# Print the learned Q-values
print(Q)
```

In this example, we define a simple environment with six states and two actions; the last state is terminal, so an episode ends when the agent reaches it. The rewards array gives the immediate reward for each state-action pair, and the transition probabilities give, for each state-action pair, a probability distribution over the next state. We then define the Q-learning algorithm in the q_learning function, which takes as input the rewards, transition probabilities, number of states, number of actions, and hyperparameters such as the learning rate (alpha), discount factor (gamma), exploration rate (epsilon), and number of episodes. The Q matrix is initialized to zeros, and after every step the Q-learning update rule moves Q[state, action] toward the target reward + gamma * max(Q[next_state]). Finally, the learned Q-values are printed.
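After training, the Q-values are typically turned into a policy by choosing, in each state, the action with the highest value. The sketch below does this in plain Python with a hypothetical 2×2 Q table; for the NumPy array produced above, `Q.argmax(axis=1)` gives the same result.

```python
def greedy_policy(Q):
    """For each state (row of Q), return the index of the highest-valued action (ties go to the lowest index)."""
    return [max(range(len(row)), key=lambda a: row[a]) for row in Q]


example_q = [[0.1, 0.4],   # state 0: action 1 looks better
             [0.7, 0.2]]   # state 1: action 0 looks better
print(greedy_policy(example_q))  # [1, 0]
```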

Code Example (OpenAI)

Here's an example of reinforcement learning code using OpenAI Gym and the Deep Q-Network (DQN) algorithm:

```python
import random
from collections import deque

import gym  # assumes gym >= 0.26 (or `import gymnasium as gym`): reset() returns (obs, info), step() returns 5 values
import numpy as np
from keras.layers import Dense, Input
from keras.models import Sequential
from keras.optimizers import Adam

env = gym.make('CartPole-v0')
state_size = env.observation_space.shape[0]
n_actions = env.action_space.n

# Define the deep Q-network model: the state goes in, one Q-value per action comes out
model = Sequential([
    Input(shape=(state_size,)),
    Dense(24, activation='relu'),
    Dense(24, activation='relu'),
    Dense(n_actions, activation='linear'),
])
model.compile(loss='mse', optimizer=Adam(learning_rate=0.001))

# Define the replay buffer
replay_buffer = deque(maxlen=10000)

# Define the hyperparameters
batch_size = 32
gamma = 0.95
epsilon = 1.0
epsilon_decay = 0.995
epsilon_min = 0.01

# Define the main training loop
for episode in range(1000):
    state, _ = env.reset()
    state = np.reshape(state, [1, state_size])
    done = False
    total_reward = 0

    while not done:
        # Choose an action based on the epsilon-greedy policy
        if np.random.rand() <= epsilon:
            action = env.action_space.sample()
        else:
            q_values = model.predict(state, verbose=0)
            action = np.argmax(q_values[0])

        # Take the chosen action and observe the reward and next state
        next_state, reward, terminated, truncated, _ = env.step(action)
        next_state = np.reshape(next_state, [1, state_size])
        done = terminated or truncated

        # Store the transition; only a true termination (not a time-limit cutoff) stops bootstrapping
        replay_buffer.append((state, action, reward, next_state, terminated))
        state = next_state
        total_reward += reward

        # Train the model on a random batch of transitions from the replay buffer.
        # Distinct names (states, actions, ...) avoid overwriting the current episode's variables.
        if len(replay_buffer) >= batch_size:
            batch = random.sample(replay_buffer, batch_size)
            states = np.vstack([t[0] for t in batch])
            actions = np.array([t[1] for t in batch])
            rewards = np.array([t[2] for t in batch])
            next_states = np.vstack([t[3] for t in batch])
            terminals = np.array([t[4] for t in batch], dtype=float)

            # Bellman targets: r + gamma * max_a' Q(s', a'), with no bootstrap after termination
            targets = model.predict(states, verbose=0)
            next_q = model.predict(next_states, verbose=0)
            targets[np.arange(batch_size), actions] = rewards + gamma * np.max(next_q, axis=1) * (1.0 - terminals)
            model.fit(states, targets, verbose=0)

    # Decay the exploration rate after each episode
    if epsilon > epsilon_min:
        epsilon *= epsilon_decay

    # Print the results for each episode
    print("Episode: {}, Total reward: {}, Epsilon: {:.2f}".format(episode + 1, total_reward, epsilon))

# Close the environment
env.close()
```

This code uses OpenAI Gym to create the CartPole-v0 environment and trains a deep Q-network to balance the pole on the cart. It implements the DQN algorithm with a replay buffer and an epsilon-greedy exploration strategy. At each step, the agent selects an action with the epsilon-greedy policy, takes it, observes the reward and next state, stores the transition in the replay buffer, and trains the model on a random batch of transitions from the replay buffer. The exploration rate epsilon is decayed after each episode, and the total reward for each episode is printed as training progresses.
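The replay buffer is what makes the random-batch training possible. Consecutive transitions within an episode are strongly correlated, so sampling uniformly from a large store of past experience gives the network more varied training batches and lets each transition be reused many times. The sketch below pulls the buffer out into a small class; `ReplayBuffer` and its method names are hypothetical, not part of Gym or Keras.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) transitions."""

    def __init__(self, capacity=10000, seed=None):
        self.buffer = deque(maxlen=capacity)  # the oldest transitions are dropped once full
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Uniformly sample distinct transitions and regroup them into per-field tuples."""
        batch = self.rng.sample(list(self.buffer), batch_size)
        states, actions, rewards, next_states, dones = zip(*batch)
        return states, actions, rewards, next_states, dones

    def __len__(self):
        return len(self.buffer)


buf = ReplayBuffer(capacity=3, seed=0)
for t in range(5):
    buf.add(t, 0, 1.0, t + 1, False)
print(len(buf))  # 3: only the three most recent transitions are kept
```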

Conclusion

Overall, reinforcement learning is a powerful and flexible approach to machine learning that is well-suited for decision-making problems where there is a clear feedback signal. Its applications are wide-ranging and its potential impact is significant, making it an exciting area of research in artificial intelligence.